June 24, 2020

At a time when global economies are closely monitored, industries are recalibrating their futures and individuals are upskilling, what should the ‘new normal’ look like for businesses that want to visualise their real-time performance?

Not long ago, businesses were motivated to invest in new technology concepts such as Artificial Intelligence, Intelligent Automation and Predictive Analysis. But how many of these investments assured business continuity and left business performance unaffected? Only a few.

In a recent blog post, “Low-code or schmo-code? Don't monkey around with enhanced automation”, Phil Fersht (Founder & CEO, HFS Research) talks with Cyrus Semmence (Senior Researcher Analyst, HFS) about the need to revisit what business continuity actually requires. Their discussion offers insight into why earlier technology investments proved ineffective: businesses did not estimate their impact.

The key message of the blog was that today's mega shift towards digitalised business operations needs a balanced approach to technology adoption to drive optimisation and automation. It also revives a long-standing business ask: technology solutions should serve business requirements and address business impact. Hence, the outcome shouldn’t be limited by a platform's ability to solve one part of a business problem; rather, it must scale to enhance business performance and deliver the business outcome.

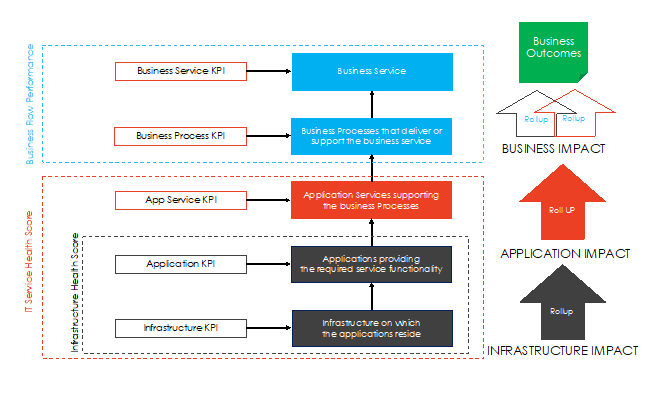

Business performance is measured by key performance indicators (KPIs) defined according to the desired business outcomes. A business outcome is always the result of the combined efforts of People, Process and Technology. For a business leader, it is also essential that these KPIs are backed by real-time performance data. This drives business leaders to concentrate heavily on technology as a steady source of data while neglecting the role of the overall functional process, which results in heavy investment in adopting technology platforms. Adopting a low-code or RPA platform therefore makes more sense only after the areas of impact are understood.

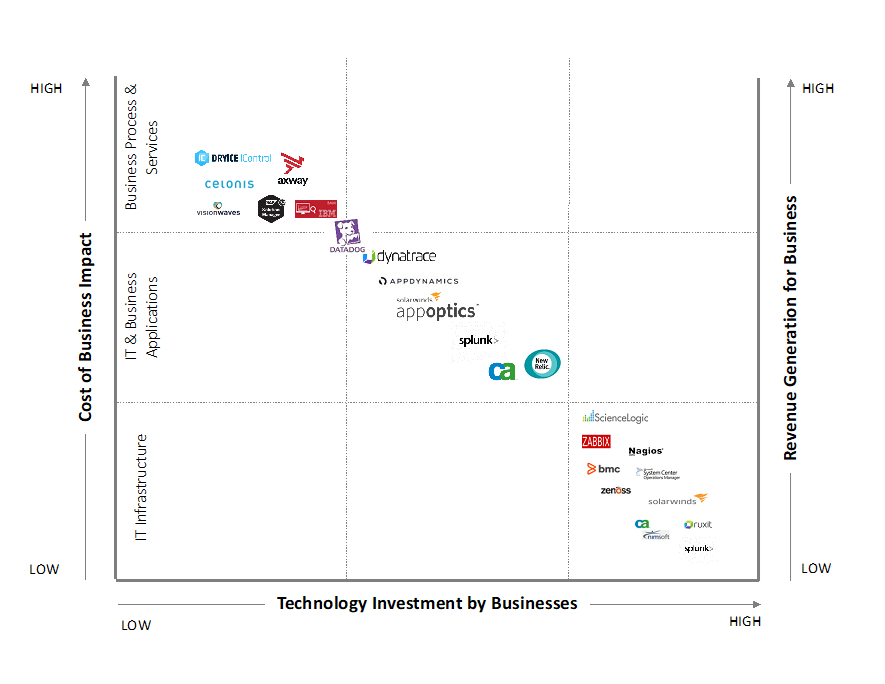

Phil’s earlier blog post, “RPA died. Get over it. Now focus on designing processes that deliver superlative experiences”, published in February 2020, made it clear that businesses were investing heavily in RPA to automate deskside tasks, while the cost of operating an RPA platform never justified its return. The broader trend in how businesses invest in technology can be read from the Spend vs Impact vs Revenue Generation graph (Figure 1). One may agree with Phil’s statement in that post: “The pieces of RPA that survive are the process orchestration tools (discover, design, automate and mine) that form part of what we see as the evolution towards "Intelligent Digital Workers" which augment human experiences and helps with real customer-to-employee intimacy.”

Even today, technology investments are driven more by frequency of resolution than by cost of impact, leading businesses to spend on fire-fighting technology issues rather than on enabling a smooth business process or service line. In simple terms, the complexity of the IT landscape in any business today leads the Global IT department to make the majority of technology investments regardless of their relevance to revenue generation or to the cost of business impact. In an ideal scenario, visibility into the business would measure business health in depth, highlight areas of impact and even predict possible failures in delivering a business service to customers. Once this is realised, technology spend would shift towards areas that are high in revenue generation and cost impact.

From the critical perspective of technology adoption, a business’s ‘cost to resolve’ depends on three key pillars: Stability, Efficiency and Cost to Run. These pillars also frame how a business leader calculates the return on investment in technology solutions. One thing to note is that these technology solutions prompt action only after events have occurred, whereas their promise is to prepare business leaders for upcoming challenges.

Stability is a key contributor to business continuity in the ‘as-is’ state, whereas efficiency improvement drives profitability. For Cost to Run, the main goal is continual reduction while maintaining the other two pillars. To be ready and well prepared, a business leader needs to understand and visualise the current state of their environment. However, to ease governance, businesses have split their overall service lines into small units of system operations that depend on one another to deliver a business outcome. Each of these small units works within its own defined operating model, with specified and relevant SLAs or OLAs. Screening the outcomes delivered by each unit shows that its focus lies only on its own defined deliverable. So who is looking at the bigger picture? Who is responsible for measuring the business outcome? Who tracks how the dependencies between these small units affect overall business performance?

Today, problems such as overhead, manual tasks and inefficiencies are defined at a micro level by these small units, and are therefore resolved with solutions that serve only these micro-situations. The initiatives taken to deal with these micro-level problems may be automation, or recommendations from predictive analysis to support decision making.

It is evident that businesses end up duplicating their investments to resolve these micro-situations, whether or not the resulting solutions can scale. The risk of duplicated investment becomes high, and an increase in ‘cost to resolve’ is unavoidable. Yet no one is talking about how these costs, risks and inefficiencies affect overall business outcomes. We have all worked with consultants who rely on tribal knowledge to compare businesses against industry standards and design a risk framework for business impact. But the world is now generating data at hypersonic speed because of rapid digitisation projects, which makes more specific and pointed observations possible. What is needed is not only an industry-standard framework, but a functional model backed by IT data that visualises the real-time behaviour of any business process with a direct or indirect impact on business outcomes.

Business leaders interested in technology advancements must qualify problems by their scale of impact. Today, technology teams approach business problems by implementing many monitoring solutions, but fail to communicate business impact proactively. These monitoring systems collect and store data based on technical KPIs and surface only technical information.

Such technical insights limit business owners’ ability to identify areas for optimisation and, in turn, lead businesses to spend heavily on automation. But is the cost of automation justified when its return is measured against stability and efficiency? The answer will remain unknown until real-time business performance governance is in place.

Moreover, the varying requirements of individual businesses, such as maintaining existing operational processes, supporting legacy technology estates, needing non-intrusive monitoring, and keeping processing and storage costs low for commercial reasons, mean that a range of tools will be deployed for any given customer.

Business leaders do not set out to build a heterogeneous landscape for monitoring their business health; rather, they want to retain the flexibility to use the right tool for the right job. So, to link IT performance effectively to business processes, there will be a continuous need to capture data from a multitude of sources, focusing on (a) common tools for Application Performance Management/Operations Intelligence, e.g. ITRS Geneos, Splunk, Dynatrace and AppDynamics, or (b) common streaming and distributed storage frameworks, e.g. Kafka, Hadoop and Elastic Stack.

Aggregating this data would allow business leaders to make business sense of it by tagging business KPIs with the IT data behind them, driving proactive impact analysis. This, in turn, would lead to confident and conclusive decisions on business process optimisation and automation.
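To make the idea of tagging business KPIs with IT data a little more concrete, here is a minimal Python sketch. It assumes that readings from the various tools have already been normalised into one common record; the metric names, KPI names, thresholds and the `kpi_impact` helper are all hypothetical, and no real Splunk, Dynatrace or Kafka API is called.

```python
from dataclasses import dataclass


# Hypothetical normalised metric record; in practice each source
# (an APM tool, a Kafka topic, etc.) would need its own adapter.
@dataclass(frozen=True)
class Metric:
    source: str   # where the reading came from (illustrative label only)
    name: str     # technical metric name (hypothetical)
    value: float


# Hypothetical tagging: which technical metrics back which business KPI,
# and the threshold above which a reading counts as a breach.
KPI_TAGS = {
    "order_to_cash": {
        "payment_api.p95_latency_ms": 800.0,
        "order_queue.backlog": 5000.0,
    },
    "customer_onboarding": {
        "kyc_service.error_rate_pct": 2.0,
    },
}


def kpi_impact(metrics, kpi_tags):
    """Return, per business KPI, the tagged metrics currently above threshold."""
    latest = {}
    for m in metrics:
        latest[m.name] = m  # keep the last reading seen for each metric name
    impact = {}
    for kpi, thresholds in kpi_tags.items():
        impact[kpi] = [
            (name, latest[name].source, latest[name].value)
            for name, limit in thresholds.items()
            if name in latest and latest[name].value > limit
        ]
    return impact


readings = [
    Metric("dynatrace", "payment_api.p95_latency_ms", 950.0),
    Metric("kafka", "order_queue.backlog", 1200.0),
    Metric("splunk", "kyc_service.error_rate_pct", 0.4),
]

for kpi, breaches in kpi_impact(readings, KPI_TAGS).items():
    status = "AT RISK" if breaches else "healthy"
    print(kpi, status, breaches)
```

Here the slow payment API marks the order-to-cash KPI as at risk, while onboarding stays healthy. The simple threshold rule is only for illustration; a real model would also need to capture the dependencies between operational units described above.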

Pravesh Gosain

Pravesh Gosain heads Offering Management for DRYiCE Intelligent Automation products and solutions, and also leads Outbound Product Management for the DRYiCE portfolio. He has 12 years of industry experience and has managed technology products in the Automation, AI and Analytics domains. As one of the charter members of DRYiCE Software, he has also contributed to pre-sales and business development for Tools & Cloud solutions. Pravesh holds a Master’s degree in Business Administration and a Bachelor’s degree in Electronics & Communication Engineering, and is a Microsoft Certified Professional. He was recently recognised as one of the Founders of the Pragmatic Alumni Community for Global Product Management professionals.