August 26, 2020

Traditional IT operations are no longer future-proof. Revolutionary technologies such as hybrid cloud, multi-cloud computing, containers, and microservices bring enhancements along with gold data for decision analysis. At the same time, budget constraints leave traditional operations scrambling to assure availability and performance.

To get through this, a paradigm shift in IT operations is inevitable.

The ITOps Era

The term ITOps refers to Information Technology Operations. Its major activities are the provisioning, administration, and maintenance of applications and infrastructure resources, together with technical support. Generally, operations run in silos with a combination of engineers and tools, where infrastructure and application operations are technically segmented for hassle-free management, maintenance, and budgeting. These logical segments are commonly referred to as “towers”, and each tower is managed by dedicated engineers and tools.

Several buzzwords are circulating in the market, such as AIOps, Serverless, DevOps, and NoOps. They are misnomers that attract many interpretations because they are broad and non-specific and, more importantly, they are not interchangeable. Some common misinterpretations are:

- AIOps is nothing but IT operations completely controlled by artificial intelligence or algorithms

- No servers in the Serverless model

- DevOps is nothing but combining development and operations teams

- No operations in the NoOps model

Depending on the enterprise's maturity and transformation approach, these models produce different degrees of outcomes, but they share the same ultimate goals:

- Reduce complexity in IT operations

- Embrace automation

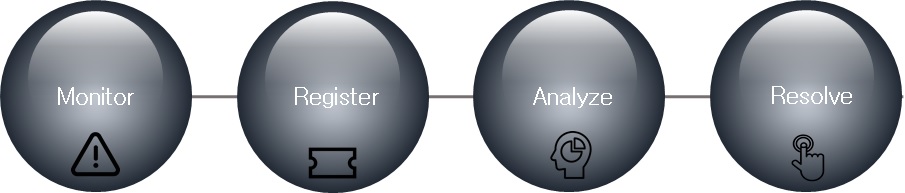

- Shorten time-to-resolution

- Improve efficiency

- Reduce cost

Let's look at the technology behind these buzzwords and how to achieve these goals.

The AIOps Quest

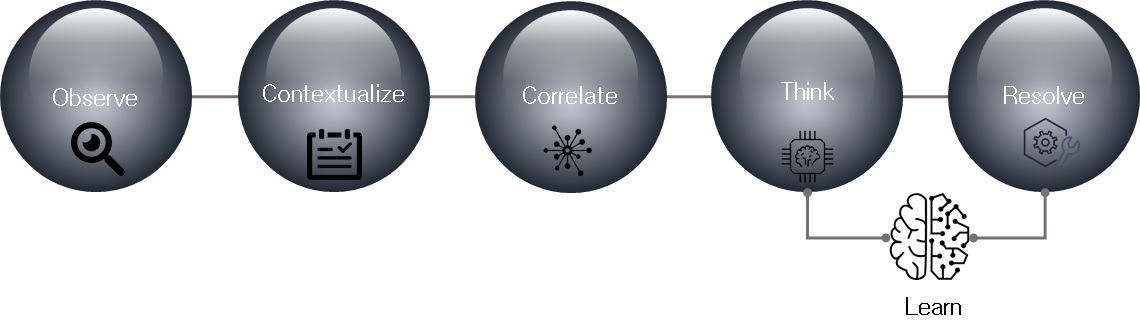

Legacy IT operations are continuously being renovated to reach the AIOps state. AIOps is commonly expanded as Artificial Intelligence Operations or Algorithmic Operations. It helps the enterprise harness artificial intelligence to enable advanced automation, reduce cost, and improve efficiency, supported by technologies such as machine learning, big data, pattern recognition, deep learning, and neural nets. The advanced automation tied to AIOps includes AI-driven alert aggregation, event correlation, deduplication, contextualization, variance detection, situation analysis, root-cause analysis, knowledge discovery, predictive analytics, ticket creation, and remediation, all of which become achievable without human touch or heavy scripting. The larger goal is to achieve high efficiency, quality, and sustainability, and to lift operations from the traditional remediation cycle to zero-incident operation or, at a minimum, error-free and faster resolution.
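To make two of the automation steps named above more concrete, here is a minimal sketch of alert deduplication and event correlation. It is a simple rule-based stand-in, not how any particular AIOps platform works: the `Alert` fields, the 60-second window, and the per-host grouping are all assumptions chosen for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    host: str       # hypothetical field: machine that raised the alert
    check: str      # hypothetical field: name of the failing check
    timestamp: int  # seconds since an arbitrary start


def deduplicate(alerts, window=60):
    """Drop repeats of the same (host, check) seen within `window` seconds."""
    last_seen = {}
    unique = []
    for alert in sorted(alerts, key=lambda a: a.timestamp):
        key = (alert.host, alert.check)
        previous = last_seen.get(key)
        if previous is None or alert.timestamp - previous > window:
            unique.append(alert)
        last_seen[key] = alert.timestamp
    return unique


def correlate_by_host(alerts):
    """Group alerts per host so one ticket can cover related symptoms."""
    groups = defaultdict(list)
    for alert in alerts:
        groups[alert.host].append(alert.check)
    return dict(groups)


raw = [
    Alert("db-01", "cpu_high", 0),
    Alert("db-01", "cpu_high", 30),   # repeat within the window
    Alert("db-01", "disk_full", 45),
    Alert("web-01", "http_5xx", 50),
    Alert("db-01", "cpu_high", 200),  # same check, but much later
]

unique = deduplicate(raw)
incidents = correlate_by_host(unique)
```

A real platform would typically replace the fixed window and the host-based grouping with learned patterns, but the shape of the task stays the same: many raw alerts in, fewer grouped incidents out.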

According to Gartner, AIOps platform maturity, IT skills, and operations maturity are the chief inhibitors to rapid time to value. Other emerging challenges for advanced deployments include data quality and lack of data science skills within I&O [1].

Is the entire IT operation automated? Are maintenance costs eliminated? Can businesses run without any infrastructure downtime? Are humans abstracted away from IT operations?

The answer is “No.” So, where is the snag? An AIOps platform is built on tools, technologies, and data, and it takes time to learn and act. Along the way, the enterprise should realize that AIOps delivers maximum efficiency only when the gold data collected from multiple IT tools and processes is fed into the decision-making model in the right order, and when the remaining portions that cannot be automated are supported by skilled engineers.

No single approach is best in every situation for qualifying data and dividing tasks between automation and humans, but certain cornerstones should be considered to succeed:
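The split between automated work and work left to engineers can be sketched as a simple routing rule. This is only an illustration under stated assumptions: the `runbook` and `confidence` fields, the 0.9 threshold, and the incident records are hypothetical and do not come from any specific product.

```python
def route(incident, confidence_threshold=0.9):
    """Send an incident to automation only when a runbook exists
    and the (hypothetical) model confidence is high enough."""
    has_runbook = incident.get("runbook") is not None
    confident = incident.get("confidence", 0.0) >= confidence_threshold
    return "automation" if has_runbook and confident else "engineer"


# Hypothetical incidents; every field here is an assumption for illustration.
incidents = [
    {"id": "INC-1", "runbook": "restart_service", "confidence": 0.97},
    {"id": "INC-2", "runbook": "restart_service", "confidence": 0.55},
    {"id": "INC-3", "runbook": None, "confidence": 0.99},
]

routing = {incident["id"]: route(incident) for incident in incidents}
```

Anything that lacks a known remediation or a confident prediction falls back to a skilled engineer, which is the "leftover portion" described above.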

- Managing structured, unstructured, and insufficient data

- Accuracy in prediction models

- Tools qualification

- The right degree of integrations

- Centralized data warehouse

- Outcome-based analytical model

This ideal AIOps state can be achieved with the golden triangle of people, process, and technology. This helps the enterprise shift its focus from operations to outcomes.

DRYiCE™ Software helps enterprises adopt AIOps successfully. DRYiCE iAutomate and DRYiCE Lucy are built to solve real-world IT problems from day one. DRYiCE Software gives the enterprise complete visibility into and control over how the AI-powered automation works, and there is no limit on the efficiency and efficacy that can be obtained. Finally, adopting AI-enabled DRYiCE software and realizing ROI takes only a few weeks.

The Serverless Model

Servers still exist in a serverless environment. However, a third-party cloud service provider takes over core server management tasks such as deployment, allocation, installation, and patching, and automates them dynamically.

The serverless state can be achieved via a two-pronged approach:

- Function as a Service (FaaS)

- Serverless architectures (serverless computing)

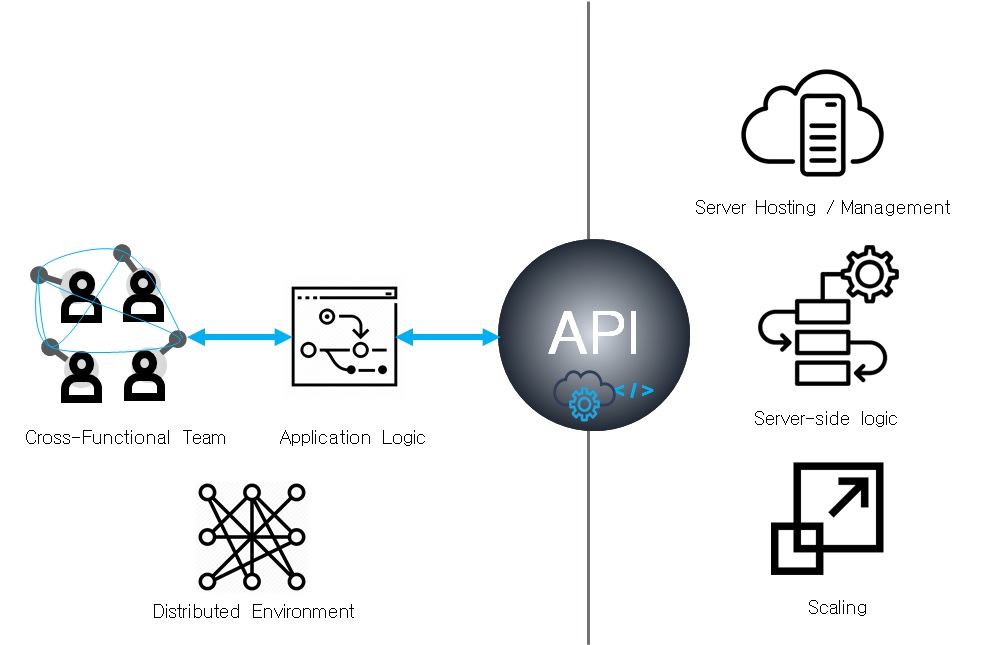

Several cloud service providers, such as AWS, Google Cloud, and Azure, lead the serverless architecture market with their FaaS offerings. FaaS is a category of cloud computing services that provides a platform for functions, allowing engineers to develop, run, and manage application functionality. It is on-demand, pay-per-use, flexible, and lightweight, and in some cases it comes with managed containers.

The functions are not self-managed, but they help the enterprise achieve a high degree of automation. This cloud-native approach lets engineers invoke cloud-native services and operational functions while writing application-specific code, custom logic, or back-end services, and then submit that code for deployment in isolated containers for high scalability, performance, and security. They don’t need to consider scaling, security patching, or the underlying operating system. In certain circumstances, generic infrastructure aspects of standard operations, such as system authentication and authorization, are automated by the cloud service provider using external services, so skilled engineers do not spend their time writing the same logic multiple times. This methodology can be a door opener for the NoOps state.

Serverless computing is an architectural design framework with software design patterns in which parallel systems are refactored into a distributed model. In this architecture, multiple systems are connected via a local network or a wide-area network to host different software components. They work together to accomplish a common goal, so the system is easy to manage and scale, with low latency and high fault tolerance. Even though this design takes away some routine system management tasks, engineers are still required to do some level of management and operations for the operating system, software processes, and distributed systems.
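As a rough illustration of what the engineer writes in a FaaS model, here is a minimal function handler. The `handler(event, context)` shape follows the convention used by AWS Lambda's Python runtime; the `name` field in the event and the response layout are assumptions made for this sketch, and other providers use different signatures.

```python
import json


def handler(event, context):
    """Entry point the platform would call once per request.

    The function contains only application logic; provisioning, scaling,
    and patching are left to the cloud service provider.
    """
    name = event.get("name", "world")  # hypothetical event field
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local invocation for illustration; in a real deployment the provider
# calls handler() and supplies the event and context objects.
response = handler({"name": "ITOps"}, context=None)
```

Note that nothing in the function refers to a server, an operating system, or a scaling policy, which is the point of the model.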

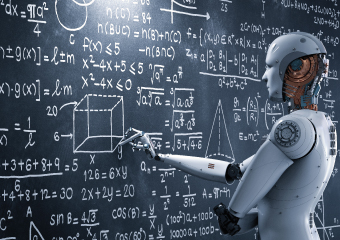

This integrated approach (FaaS and serverless computing) enables the enterprise to enjoy automation rather than allocating its skilled engineers to traditional operations. This helps the enterprise shift its focus from operations to outcomes:

- Highly scalable

- Low infrastructure and running costs

- Reduced application release cycle

- Low latency

- Reduced human error

- Endless scalability and high availability

- Faster time-to-market

The emerging serverless model is still developing in several areas, such as monitoring, patching, migration, and cybersecurity. Netflix, Walmart, and Coca-Cola are great examples of adopters. In parallel, a range of mitigation measures and alternative approaches is emerging. So, serverless computing should not be considered a silver bullet for all development and operations problems.

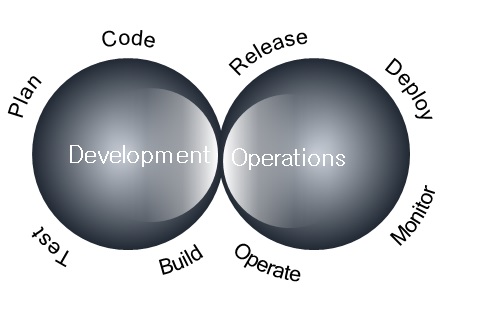

The DevOps Culture

More than a technology, DevOps is a combination of cultural shifts, methodologies, and tools for delivering applications and services at high velocity. Cross-functional teams are created by uniting developers, operations engineers, and testers to build an agile, responsive, and data-driven delivery culture in which the team takes ownership of the end-to-end application life cycle.

The culture doesn’t stop with the process. DevOps requires a wide range of tools and methodologies, such as Git, Jenkins, Docker, Kubernetes, Agile, and CI/CD pipelines, to achieve the desired state.

The cross-functional team communicates frequently to plan, code, build, test, release, deploy, monitor, and operate. Cloud-native abstract functions, distributed environments, and DevOps methodology and tools enable the team to remove silos and be effectively involved in different aspects of the development process, from development to resource management to security to database management to support. With this approach, the enterprise can replace some heavy-lifting on-premises and self-managed operations, such as data center management.

A culture with a well-established cross-functional team, tools, and techniques enables the enterprise to enjoy automation rather than allocating and maintaining multiple engineering teams for traditional operations. This helps the enterprise shift its focus from operations to outcomes:
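The stage sequence above can be pictured as a pipeline that runs each stage in order and stops at the first failure. The sketch below is a toy model, not how Jenkins or any other CI/CD tool is implemented: the stage functions are placeholders, and the failing `deploy` stage is simulated.

```python
def run_pipeline(stages):
    """Run stages in order and stop at the first one that fails.

    Returns the names of completed stages and the failing stage (or None).
    """
    completed = []
    for name, step in stages:
        if not step():
            return completed, name
        completed.append(name)
    return completed, None


# Placeholder stages; a real pipeline would run builds, tests, and deployments.
stages = [
    ("build", lambda: True),
    ("test", lambda: True),
    ("release", lambda: True),
    ("deploy", lambda: False),  # simulated failure
    ("monitor", lambda: True),
]

completed, failed_at = run_pipeline(stages)
```

Because the whole team owns the whole sequence, a failure at any stage is visible to everyone rather than being handed over a wall to another tower.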

- Speed

- Collaboration

- Performance

- Agility

- Scale

The DevOps culture is not the only recipe. Even with cross-functional teams available, there are several reasons why an enterprise may stick to standalone operations and development teams, such as dependencies on the underlying enterprise architecture and political conflicts between operations and IT over managing tools, resources, training, operations, and cost.

The NoOps World

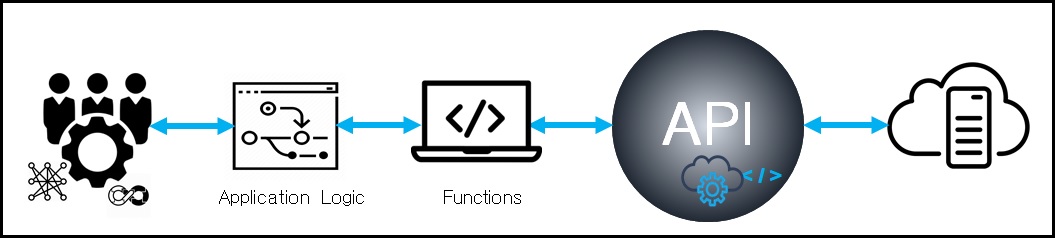

There are some operations in NoOps too. In an ideal NoOps environment, IT operations are automated and abstracted to a certain extent from the underlying machines of the cloud infrastructure. Only a small portion of the work is handled by cross-functional teams. They can narrow their focus to working with functions, developing new features, and managing the dynamic data layer.

To build a NoOps environment, a complete IT infrastructure in the cloud, high maturity in technologies, application development on serverless computing with distributed systems, and deployed DevOps cross-functional teams are all mandatory. There is a fine line between NoOps and a serverless environment with DevOps. On top of the serverless environment, the degree of collaboration needed between development and operations is considerably reduced by using specific tools and technologies. This elite NoOps state cannot be achieved overnight; it may take several years with a proper transformation plan. Even many mature large enterprises are struggling to achieve a 100% NoOps state.

What are the operations in NoOps? Cloud service providers are continuously going beyond spinning up instances, virtual machines, and containers, providing functions for the enterprise to invoke, and accepting application code for auto-deployment. So it is vital for engineers to keep their skills and knowledge up to date in order to consume the services properly. Engineers should understand how each service works, what is new, what is retired, what has changed, how secure it is, and when it should be used, and they should build continuous improvement and best practices around the operations.

Again, parallel systems need to be identified and refactored into a distributed model for the NoOps environment. In most cases, the components of distributed systems are placed on different networks and communicate and coordinate their actions by passing messages to one another, so engineers are required to oversee the operations. Beyond consuming FaaS, distributed systems have several downsides, such as complexity in monitoring and security management. So, engineers are required to fill the automation gap and manage the operations around distributed systems.
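The idea of components coordinating by passing messages can be sketched in a few lines. This is a single-process simplification: in-memory queues and a thread stand in for separate machines and a network, and the `order_id` payload is a hypothetical example. A real distributed system would typically use a message broker or network calls, which is where the monitoring and security overhead mentioned above comes from.

```python
import queue
import threading


def worker(inbox, outbox):
    """Consume messages until a None sentinel arrives, replying to each one."""
    while True:
        message = inbox.get()
        if message is None:
            break
        outbox.put({"order_id": message["order_id"], "status": "processed"})


requests = queue.Queue()  # stands in for the channel to the worker
results = queue.Queue()   # stands in for the channel back to the sender

thread = threading.Thread(target=worker, args=(requests, results))
thread.start()

for order_id in (1, 2, 3):
    requests.put({"order_id": order_id})
requests.put(None)  # tell the worker to stop
thread.join()

# Drain the replies once the worker has finished.
processed = []
while not results.empty():
    processed.append(results.get())
```

Even in this toy version, someone has to decide what happens when a message is lost or a worker never replies, which is the kind of gap engineers still fill in a NoOps setup.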

In a nutshell, NoOps can remove most IT operations tasks from the shoulders of operations staff and allow them to focus on more critical tasks such as continuous deployment, granular usage tracking, and more testing. Developers may benefit as well. NoOps frees up the chunk of time and energy that they currently dedicate to that last item in their release pipeline, so they can develop cool new products and services. This helps the enterprise shift its focus from operations to outcomes.

Although most operations are automated in NoOps, the model is not very efficient from a budgeting standpoint. Because base operations such as deployment, scaling, patching, network configuration, and security management are automated, the enterprise loses control over scaling mechanisms. This exposes the hidden costs of serverless computing, and maintaining an advanced cross-functional team is not simple either. To avoid bottlenecks, it is essential to properly qualify and implement tools for application performance monitoring, server management automation, security, and so on.

What is the paradigm shift?

There are several options for shifting the focus from operations to outcomes, but every option has pros and cons. AIOps requires maturity in qualifying tools, building integrations, and consolidating data for decision-making. Even though the serverless model removes a large portion of operations, it brings new operational challenges. Building DevOps cross-functional teams is not an overnight task, as it involves coaching developers on IT operations and training IT operations teams on new technologies and advanced development languages. For NoOps, the enterprise needs extreme maturity and flexibility in business, IT operations, and development. IT needs buy-in from business and R&D to perform such a unique shift in IT operations. Yes, a large portion of the operations is removed, but that makes the entire infrastructure lean on the application logic.

Enterprises cannot meet the ever-growing needs of modern applications with traditional ITOps alone. Taking the current state, culture, and budget into account, the right platform should be identified to transform IT operations to the next level.

Sources:

- https://www.gartner.com/doc/reprints?id=1--1XRFSQ0G&ct=191113&st=sb&mkt_tok=eyJpIjoiTkRnMVltWTRaV1l4WTJZMiIsInQiOiJ4Z1FDWXc5bFRJ%E2%80%A6

Senthil Kumar

He is passionate about and keen to remain knowledgeable in IT infrastructure, Artificial Intelligence (AI), Automation, Neural Nets, Machine Learning (ML), Natural Language Processing (NLP), Image Processing, Client Computing, Blockchain, and Public/Private Clouds. His research interests center on stack solutions to enterprise problems.

Kumar has an MS in Software Engineering (SE) along with several technical certifications, including MIT’s Artificial Intelligence (AI), Machine Learning (ML, Information Classification), Service Oriented Architecture (SOA), Unified Modeling Language (UML), Enterprise Java Beans (EJB), Cloud, and Blockchain.